Applicant

Prof. Dr. Bernd Bischl

Institut für Statistik

Arbeitsgruppe “Statistical Learning and Data Science”

Ludwig-Maximilians-Universität München

Project Summary

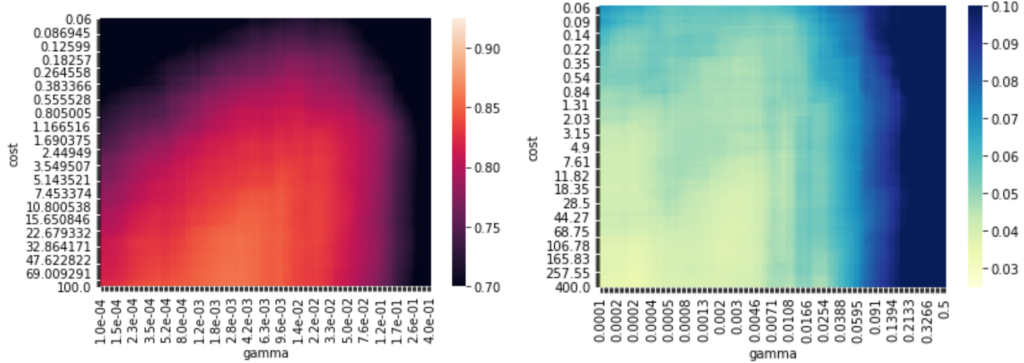

Machine learning methods have proven to be useful tools in an increasing number of scientific and practical disciplines. However, the efficient application of such methods is often still difficult for inexperienced users. This stems, in part, from the many possible algorithm configuration options or “hyperparameters” that are available for such methods and that greatly impact their effectiveness – even more so because the best choice of hyperparameters depends on the given problem and data set. Even experienced specialists may find selecting the best machine learning method and its hyperparameter values difficult, since the relationship between the data set and the optimal approach is often not directly predictable and an understanding of the underlying algorithms is lacking.

Automated Machine Learning or AutoML is an emerging field with the ultimate goal of making machine learning more accessible to users by automatically making some of the necessary decisions for the user. One approach to AutoML, based on the principles of “meta learning”, uses experience from previous machine learning experiments to quickly and robustly find hyperparameter configurations for the machine learning methods being used. A simple approach here is to try configurations that have worked well in the past; a more indirect way is to configure optimization algorithms in a way that they would have worked well on the problems already studied.

The goal of the project is to build a large collection of data on the relationship of hyperparameter configurations and dataset properties with machine learning performance, thereby providing a rich pool of “experience” that AutoML tools can draw upon to arrive at good solutions more quickly. In order to do so, random hyperparameter configurations of seven different machine learning methods were evaluated on 119 datasets. The SuperMUC NG supercomputer was utilized to achieve a truly large number of evaluations; the experiment is described in more detail in our paper.

The knowledge and data gained from the project will be used to create datasets other researchers can draw upon to investigate algorithm hyperparameters, and to extend existing AutoML approaches with a large collection of prior knowledge. In addition to predictive performance, several aspects regarding the multi-fidelity behaviour of algorithms were collected, such as performance on subsets of the datasets, their runtime and memory consumption and performance within each fold of the cross-validation process. This enables a quantitative trade-off between runtime and predictive performance in further research.